FRL | Future Realities Research Lab

Advancing the Future of Digital Realities

Research Areas

Our interdisciplinary research combines cutting-edge technology with human-centered design to push the boundaries of what's possible in future realities.

Workforce Development

Developing VR and AR solutions to enhance professional training, skill acquisition, and workplace readiness across various industries.

AI Literacy

Integrating artificial intelligence with virtual reality environments to create personalized, adaptive literacy learning experiences.

Immersive Technologies in Family Interventions

Researching how digital and immersive technologies can strengthen parent-child bonds and support healthy attachment relationships.

Behavior Activation

Designing interactive media experiences that motivate and sustain positive behavior change in health, education, and social contexts.

Environmental Education

Leveraging immersive technologies to create engaging environmental learning experiences that promote pro-environmental behaviors.

Multisensory VR

Exploring haptic feedback technologies to create more immersive and accessible virtual reality experiences, enhancing learning through touch and tactile sensations.

Trustworthy AI for Cyber-Physical Systems

This research area develops methodologies for the verification and validation of AI-controlled cyber-physical systems, using simulations as virtual testbeds to explore edge cases that are impractical or too dangerous to test in the real world.

VR and Health Communication

Leveraging extended reality (XR) platforms to develop and validate novel communication strategies for understanding psycho-behavioral components of complex medical procedures, enhancing medical self-efficacy and emotional preparedness.

Featured Projects

Discover our groundbreaking research projects that are shaping the future of immersive technologies and their real-world applications.

Lexi Learn

Advancing Adaptive Literacy Learning in Immersive Environments

Lexi Learn explores how immersive virtual environments and artificial intelligence can be integrated into early literacy education through multisensory and adaptive design. Developed as a literacy-focused educational game, Lexi Learn transforms reading and spelling into an interactive and exploratory experience within a calm, nature-inspired virtual environment. The integration of adaptive and generative AI scaffolding allows the learning environment to respond dynamically to each learner's pace and progress, supporting differentiated and inclusive instruction.

Principal Investigator: Dr. Anna Queiroz

REboot

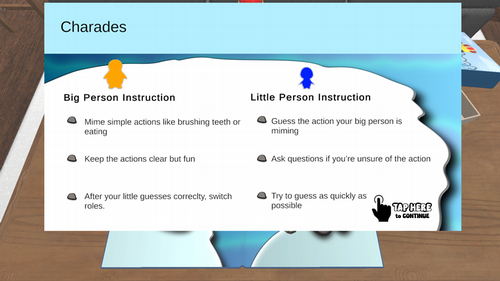

Mobile Game for Therapeutic Parent-Child Play

REboot builds on therapeutic modalities such as Child Parent Relationship Therapy, Filial Play Therapy, and Theraplay by developing a mobile game designed to facilitate therapeutic play between parents and children. The intervention takes the form of a digital board game where players roll a virtual die to move tokens, collecting points and unlocking rewards. Between rounds, parent-child dyads are prompted to engage in short, off-screen mini games—each carefully selected and reviewed by a panel of play therapists to enhance specific aspects of the parent-child relationship. The project currently has a working prototype and is actively recruiting participants.

Contact: Justin Jacobson

Digital Experiences for Behavior Activation

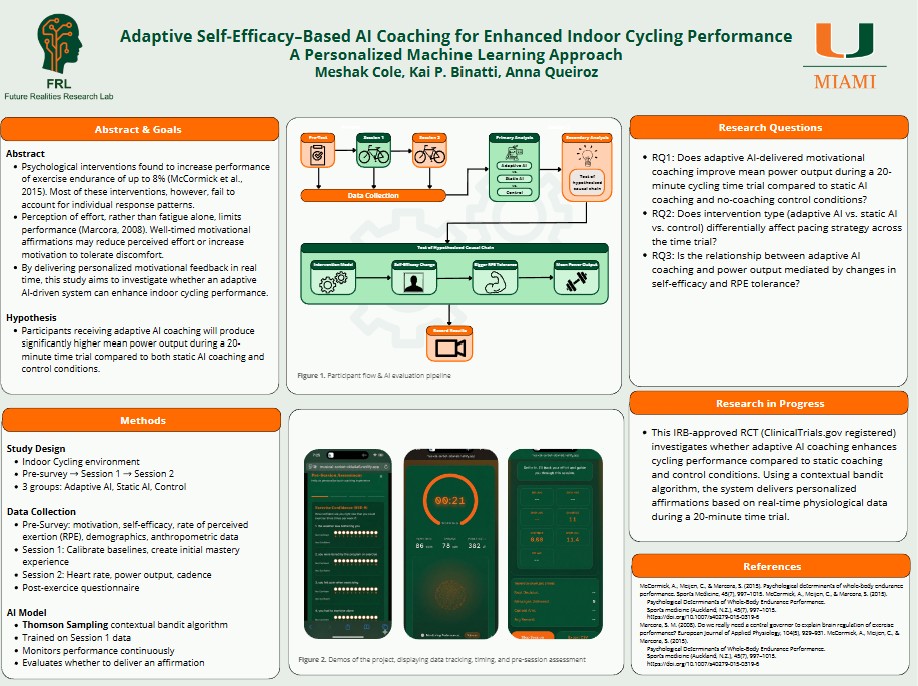

Adaptive AI Coaching for Enhanced Cycling Performance

Our project addresses a critical gap in endurance coaching by developing an adaptive AI coaching system that learns precisely when motivational support will be most effective for each individual cyclist. This innovative system uses machine learning to analyze real-time physiological data (like heart rate and power output patterns) and deliver personalized verbal affirmations at the optimal moment, aiming to reduce perceived exertion and increase the motivation to tolerate discomfort. This research is an important step in applying digital media and adaptive machine learning to real-time exercise coaching.

Principal Investigator: Dr. Anna Queiroz

Immersive Technologies in the Curriculum

The project aims to reduce the digital access gap between private and public schools, allowing students to engage with immersive technologies while enhancing environmental education. A total of 33,000 middle and high school students from low-income and indigenous communities in Brazil participated in the project.

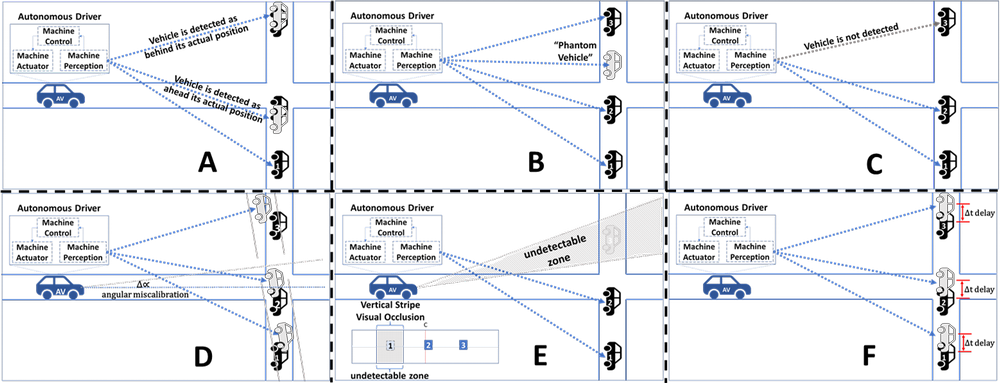

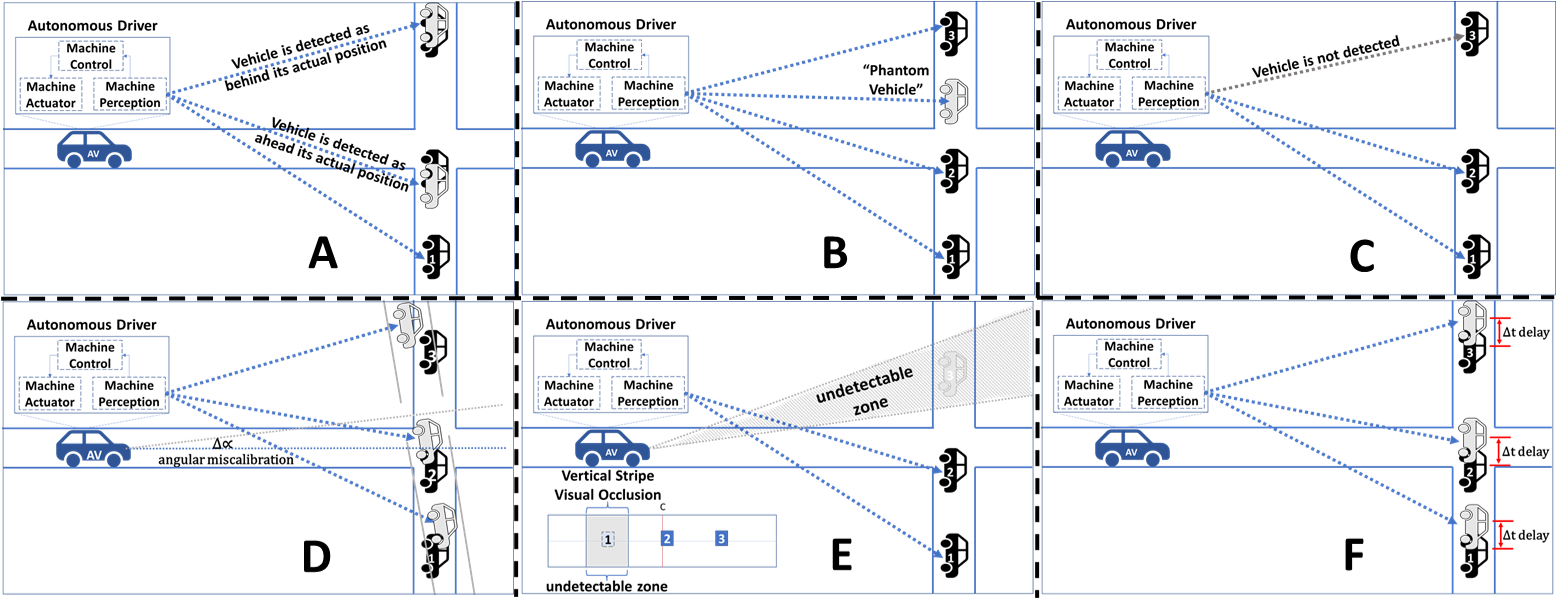

AV Perception Safety Testing

Testing Autonomous Vehicle Resilience to Perception Failures

Autonomous vehicle (AV) safety relies on the integrity of the vehicle's perception systems. However, these systems are vulnerable to non-obvious failures, including perception failures known as hallucinations. This creates a critical and often unmeasured safety gap, because these unpredictable hallucinations are not addressed by conventional verification and validation methods, which often assume a perfect or near-perfect perception stack.

Contact: Gabriel Shimanuki

RADIOACTIVE – Radiotherapy Patient Education with Virtual Reality

Immersive Storytelling for Oncology Patient Preparation

This research area explores the application of immersive technologies to improve patient understanding and emotional readiness for complex medical procedures. With a focus on oncology patients undergoing radiation therapy, this line of work investigates how immersive storytelling and virtual hospital walkthroughs can demystify the treatment environment and alleviate pre-treatment anxiety. By incorporating first-person narratives and visual simulations, the research aims to reduce patients' anxiety, increase treatment comprehension, and ultimately enhance patient satisfaction.

Principal Investigator: Prof. Crystal Chen

Radiotherapy Patient Education with Augmented Reality for Post-Treatment Recovery

AR Virtual Mirror for Recovery Visualization

This project utilizes augmented reality (AR) to enhance patient and caregiver understanding of post-radiotherapy recovery. By implementing a virtual mirror interface, the system overlays visual representations of treatment areas and their expected toxicity levels over time. Patients can visualize how specific regions of their body will respond and recover after treatment, offering a personalized and intuitive view of their healing process. The primary aim is to empower patients by improving their mental preparedness, fostering resilience, and building confidence during the recovery phase. Additionally, the AR system serves as an educational tool for family members and caregivers, helping them better understand the patient's experience and how to provide appropriate support.

Principal Investigator: Prof. Crystal Chen

Our Team

Meet the team driving innovation in future realities through interdisciplinary collaboration and cutting-edge research.

Publications & Research

Our team's research contributions and emerging work in immersive technologies, interactive media, and future realities applications.

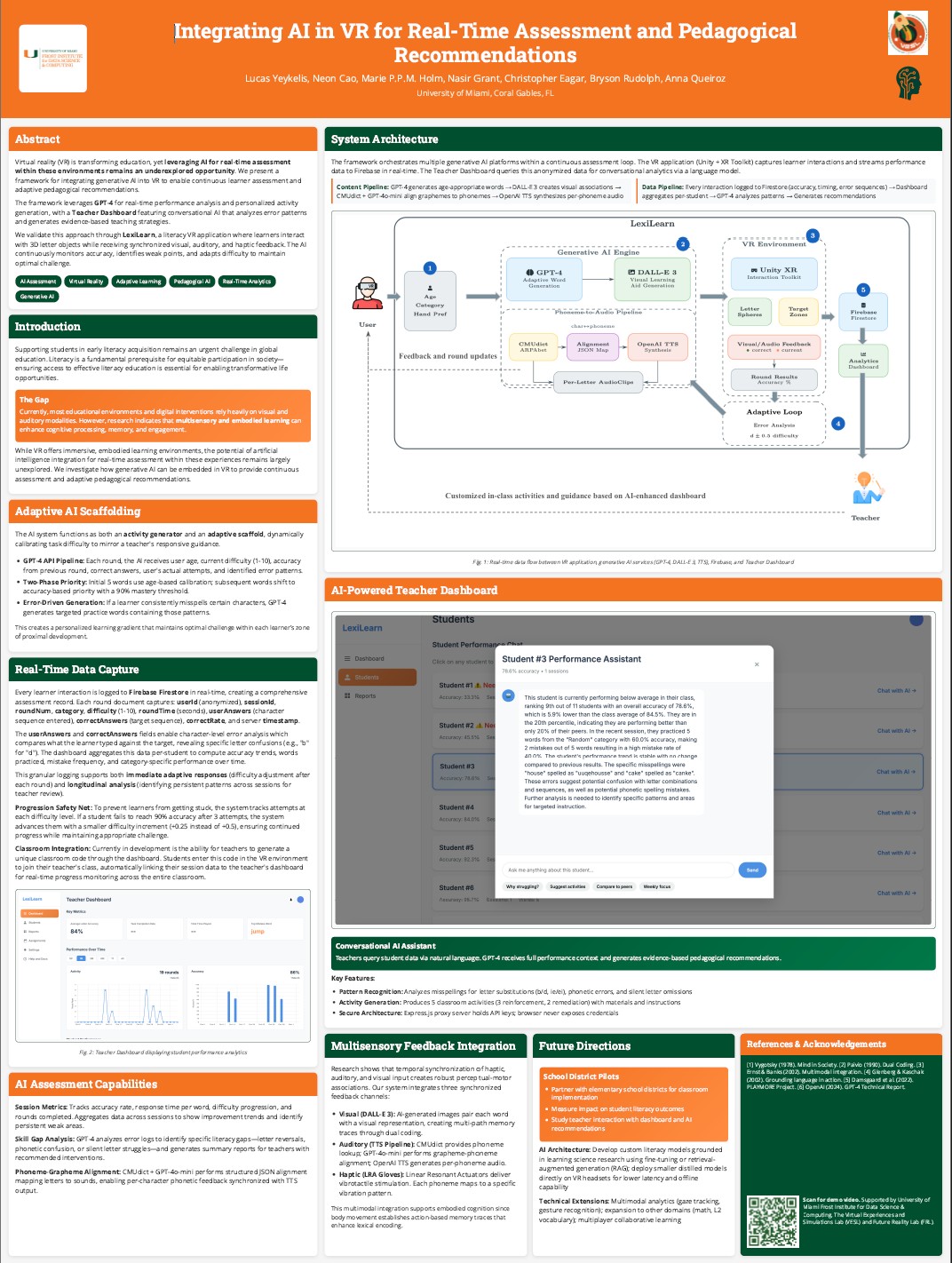

Integrating AI in VR for Real-Time Assessment and Pedagogical Recommendations

Miami XR 2026, University of Miami

A framework for integrating generative AI into VR to enable continuous learner assessment and adaptive pedagogical recommendations, validated through the LexiLearn literacy application.

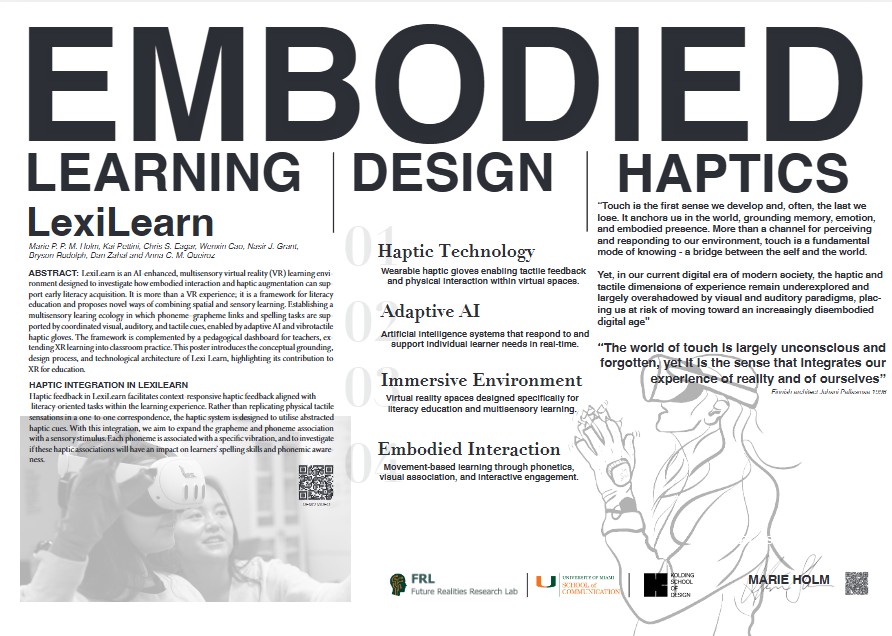

Embodied Learning, Design, and Haptics: LexiLearn

Miami XR 2026, University of Miami

An AI-enhanced, multisensory VR learning environment investigating how embodied interaction and haptic augmentation support early literacy acquisition through coordinated visual, auditory, and tactile cues.

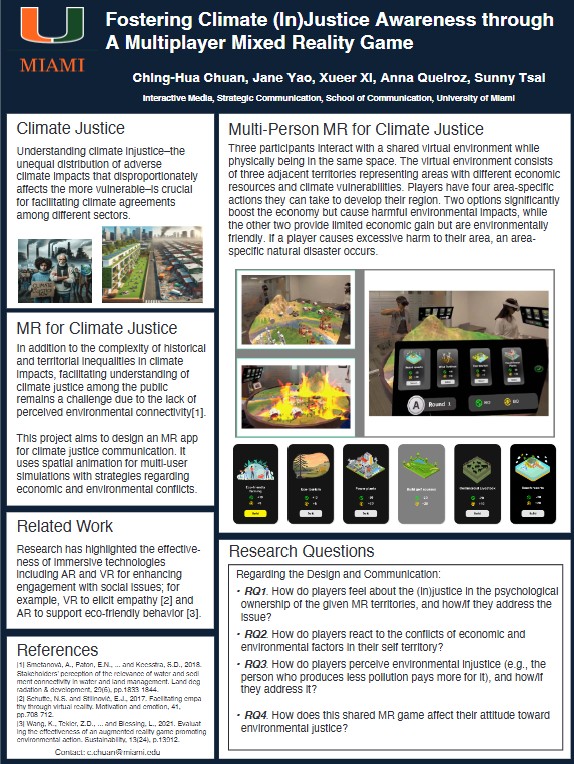

Fostering Climate (In)Justice Awareness through A Multiplayer Mixed Reality Game

Miami XR 2026, University of Miami

A multiplayer mixed reality game designed to foster awareness of climate injustice through collaborative, immersive gameplay and environmental storytelling.

Adaptive Self-Efficacy–Based AI Coaching for Enhanced Indoor Cycling Performance: A Personalized Machine Learning Approach

Miami XR 2026, University of Miami

An adaptive AI coaching system that uses machine learning to analyze real-time physiological data and deliver personalized motivational support to enhance indoor cycling performance.

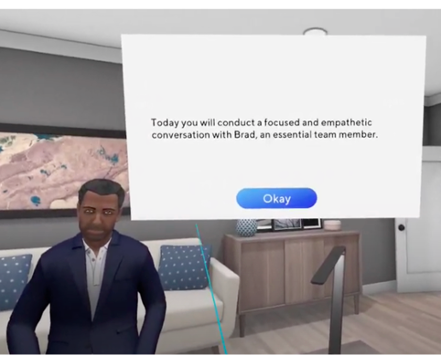

Self-review and feedback in virtual reality dialogues increase language markers of personal and emotional expression in an empathetic communication training experience

Computers & Education: X Reality, 7

This empirical study demonstrates how self-review and feedback mechanisms in virtual reality dialogues enhance language markers of personal and emotional expression in empathetic communication training experiences...

Virtual Exchange, Virtual Reality, and Conversational Artificial Intelligence in Global Health Courses: Building Empathy and Understanding in Humanitarian Response

International Virtual Conference Exchange. Greece

This study explores how virtual exchange, virtual reality, and conversational AI can be integrated in global health education to build empathy and understanding of humanitarian response...

How do immersive technologies influence cognitive load, memory retention, and knowledge transfer? Evidence from a multilevel meta-analysis

75th International Communication Association Conference. Denver, USA

A comprehensive multilevel meta-analysis examining the impact of immersive technologies on cognitive load, memory retention, and knowledge transfer processes...

Predicting and Understanding Turn-Taking Behavior in Open-Ended Group Activities in Virtual Reality

28th ACM SIGCHI Conference on Computer-Supported Cooperative Work & Social Computing (CSCW). Norway.

This research investigates turn-taking behavior patterns in open-ended group activities within virtual reality environments, providing insights for collaborative VR design...

An interactive-participatory session for exploring the potential of Immersive Virtual Reality for family therapy

EFTA-RELATES 2025. France

An innovative interactive-participatory session design that explores the potential of immersive virtual reality applications in family therapy contexts through collaborative inquiry methodologies...

Injecting hallucinations in autonomous vehicles: A component-agnostic safety evaluation framework

arXiv

This research presents a component-agnostic safety evaluation framework for autonomous vehicles, focusing on injecting and analyzing hallucinations to assess system robustness and safety protocols...